We Often Confuse Uncertainty With Risk

Or, we use the single word ‘probability’ to mean countless different things

2024 has been a very hot year, and India has had its fair share of heatwaves. Considering how common they’ve become, here’s a question: what does it really mean when the forecast says there’s a 70% chance of a heatwave next week?

Are they saying that whenever similar weather conditions have prevailed, we’ve experienced a heatwave 70 out of 100 times? Or are they saying that they’re not 100% sure, but only 70% confident, that there will be a heatwave next week?

I cannot tell for sure. I suspect (most of) the folks at the weather station cannot tell for sure either.

Here’s another question: what does it mean for a forecast to be accurate? Is the forecast wrong if there’s no heatwave? That doesn’t seem fair, because 70% isn’t technically a sure thing.

Or, is the forecast correct only if, over time, with similar weather conditions, there’s a heatwave 70 out of 100 times? But that doesn’t make sense. The weather an hour from now might be similar, but it becomes more and more uncertain the longer we gaze into the future. There’s a reason why you don’t try to forecast whether it will rain on your wedding day three months from now.
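To make the "70 out of 100 times" reading concrete, here is a minimal Python sketch of what checking it would involve: group past days by the probability the forecast stated, then compare that number with how often a heatwave actually followed. Everything here is an assumption for illustration. The data is synthetic and generated so that the forecasts are well calibrated by construction, and `observed_frequencies` is a hypothetical helper, not anything a weather service is known to use.

```python
import random
from collections import defaultdict

def observed_frequencies(forecasts, outcomes):
    """Group days by stated probability; return {stated: observed event fraction}."""
    counts = defaultdict(lambda: [0, 0])  # stated probability -> [events, days]
    for stated, happened in zip(forecasts, outcomes):
        counts[stated][0] += int(happened)
        counts[stated][1] += 1
    return {stated: events / days for stated, (events, days) in sorted(counts.items())}

# Synthetic data (an assumption, not real weather records): each day gets a
# stated probability, and the heatwave then occurs with exactly that probability.
rng = random.Random(42)
forecasts = [rng.choice([0.3, 0.5, 0.7]) for _ in range(30_000)]
outcomes = [rng.random() < stated for stated in forecasts]

for stated, observed in observed_frequencies(forecasts, outcomes).items():
    print(f"forecast {stated:.0%}: heatwave on {observed:.1%} of those days")
```

Note what this check needs: thousands of days that can be treated as comparable. It says nothing about whether the forecast for any single week was right, which is exactly the difficulty described above.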

This question becomes even more difficult when we talk about unique events, such as elections. What did it mean when the exit polls predicted that there was a 90% chance the BJP-led NDA would win over 350 seats in the 2024 general election in India?

Did it mean that if you rerun the election over and over, NDA wins 350+ seats 90% of the time? Okay, but there’s just one election, with one outcome, and no two elections are ever the same, even in theory. So, this claim is really of no use.

Did it mean that elections are like rolling a die, but instead of a one-in-six chance, NDA’s chance of winning 350+ seats was 90 out of 100? When it didn’t happen, was the prediction itself wrong, or did the less likely outcome just happen? If it’s not surprising for a less likely outcome to happen, what’s even the point of the prediction?

When we say, “There’s an X percent chance that Y will happen,” we’re often making assumptions that can mean very different things. Saying “there’s a 70% chance Socrates was a real person” and “there’s a 50% chance a coin will land heads on the next toss” are both probabilistic statements. However, they’re very different kinds of claims.

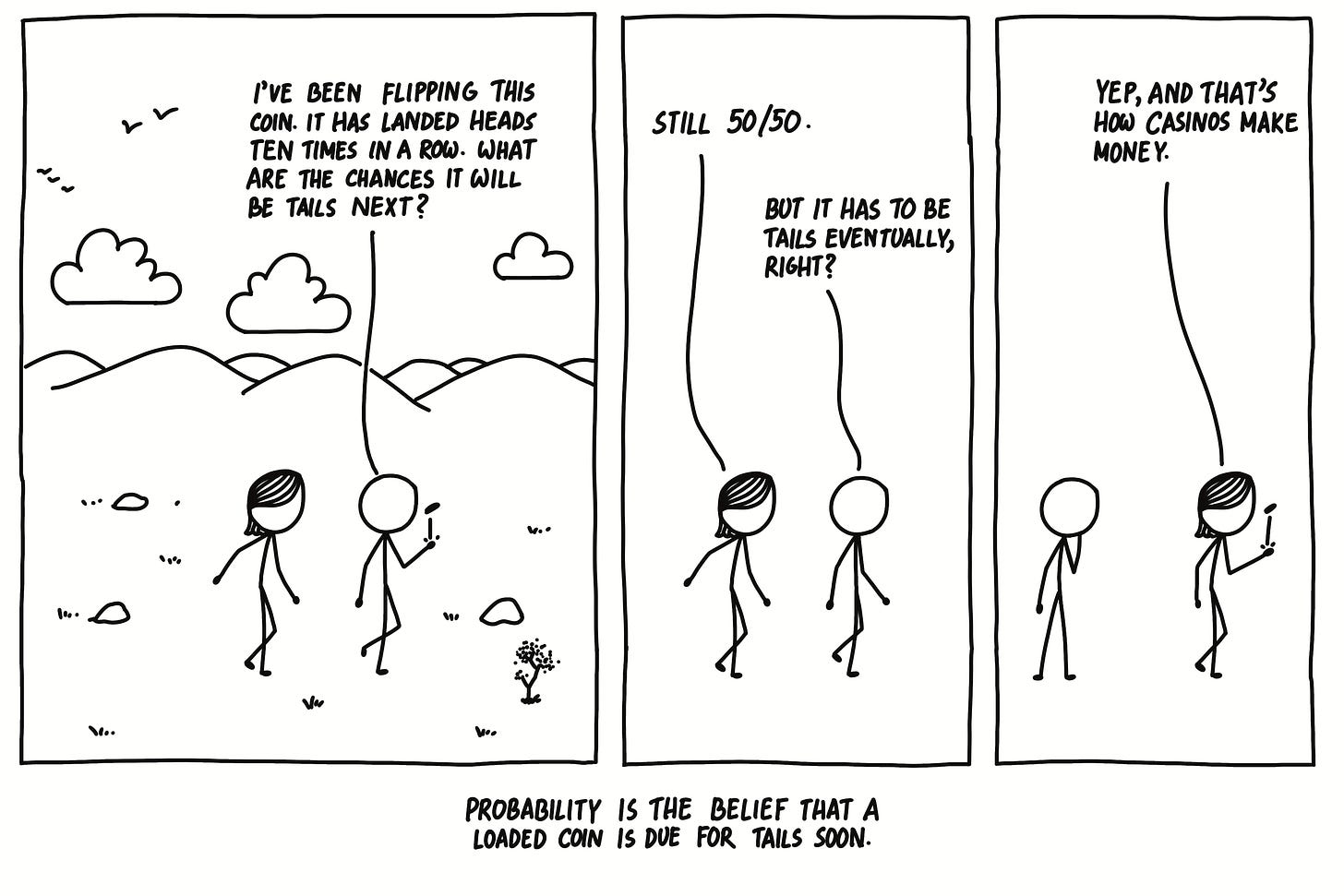

There are two main camps for probability statements: frequency-type and belief-type.

A frequency-type probability is based on how often an outcome will occur, particularly over the long run during repeated trials. For example, if you flip a fair coin a million times, you’ll get heads about 50% of the time.
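The long-run claim is easy to try out. The sketch below simulates fair coin tosses with Python's standard `random` module and reports the fraction of heads for increasing numbers of flips. The function name and the fixed seed are illustrative choices, and a pseudo-random generator is only a stand-in for a physical coin.

```python
import random

def heads_fraction(num_flips, seed=0):
    """Simulate num_flips fair coin tosses and return the fraction that land heads."""
    rng = random.Random(seed)
    heads = sum(rng.random() < 0.5 for _ in range(num_flips))
    return heads / num_flips

for n in (10, 1_000, 1_000_000):
    print(f"{n:>9} flips: {heads_fraction(n):.4f} heads")
```

With only ten flips the fraction can land well away from 0.5. With a million it should sit very close to it, and that long-run behaviour is all a frequency-type statement promises.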

Belief-type probabilities are different. They express the degree of confidence you have in a specific claim. Socrates was either a real person or he wasn’t. There’s no way to repeat reality and see how many worlds Socrates exists in and how many he doesn’t. Any probabilistic statement about his existence is a belief-type probability. It’s just a best guess based on the evidence.

When we’re confronting a problem in a simple, controlled system — such as a roll of the dice, with six clearly defined possible outcomes — probabilistic reasoning works flawlessly. Unfortunately, for many of the important problems we face in life, those assumptions don’t apply. Real life is chaotic, and probability doesn’t work in chaos.

To understand this, I want to briefly discuss the difference between risk and uncertainty — which we often treat as the same, even though they are quite different.

Risk is when we don’t know what will happen, but we do know the possibilities. Rolling a die is a matter of risk rather than uncertainty. We don’t know which number will come up, but we do know that each number has a one-in-six chance.
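Because the full set of outcomes is known, questions about dice can be answered exactly by counting. A small Python sketch follows, assuming fair six-sided dice; `probability` is a hypothetical helper written for this example.

```python
from fractions import Fraction
from itertools import product

FACES = range(1, 7)  # the six known outcomes of one fair die

def probability(event, num_dice=1):
    """Exact probability that `event` holds for a roll of `num_dice` fair dice."""
    rolls = list(product(FACES, repeat=num_dice))
    favourable = sum(1 for roll in rolls if event(roll))
    return Fraction(favourable, len(rolls))

print(probability(lambda roll: roll[0] == 4))        # one die shows a 4
print(probability(lambda roll: sum(roll) == 7, 2))   # two dice sum to 7
print(probability(lambda roll: sum(roll) == 12, 2))  # two dice sum to 12
```

The counting works only because every possible roll can be listed in advance. That is what makes this a matter of risk.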

Risk can be tamed. Uncertainty, by contrast, refers to situations in which we don’t know what will happen and we don’t have any way of assessing the likelihood of something happening. We’re completely in the dark.

On 29 September 2016, a team of Indian Army commandos crossed the Line of Control into Pakistan-Occupied Kashmir to take down a group of militants. The raid occurred eleven days after militants had attacked an Indian Army outpost at Uri in Jammu and Kashmir, and killed 19 soldiers.

There were many unknowns in the situation: were the militants present in the territory? Would the raid succeed in killing them with minimal loss of life? Would the Pakistani government attack or denounce India for this? Would this increase militancy in the future?

I don’t have the details of how they went about it. But I can imagine intelligence officers giving probabilistic estimates. “Based on our intelligence, there’s an 80% chance they’re there.” These are subjective, belief-based expressions of confidence.

The raid was a unique event, not like flipping a coin where you can expect similar results over time. The success of past raids couldn’t really predict whether this one would succeed. The response from Pakistan might depend on small things like how much sleep the Pakistani intelligence chief got the night before, who was in government, how the situation was explained to them, or even the mood of the generals in charge.

When a situation is genuinely uncertain, probability becomes useless. The decision had to be made in the face of uncertainty, not risk.

The world changes from moment to moment. Sometimes, these changes reach a tipping point where they create completely new cause-and-effect patterns. We can’t predict exactly when these big shifts happen.

Any forecast in 2000 about phone usage in 2020 would have been wildly off. The relationship between humans and phones fundamentally changed after the iPhone. Then, a once-in-a-century pandemic kept people bored at home. The world became different.

In a constantly changing world, the future is uncertain. We are bound to get lost when we use probability in the land of uncertainty.